How to create a poker bot using Python

Legal & Ethical Disclaimer: This content is for educational and research purposes only. Automated poker bots on commercial online poker sites may breach terms of service and may be illegal in certain jurisdictions. Please verify local laws and the policies of your poker applications or websites before using any automated poker software. This content focuses only on academic research, game theory applications, and educational AI development.

Introduction: My Experience in Poker AI Research

As a researcher interested in both artificial intelligence and game theory, I have spent a lot of time thinking about poker AI and its remarkable successes over the past decade. In 2017, when I first learned about Carnegie Mellon University’s Libratus, which had just defeated several top professional players at heads-up no-limit Texas Hold’em, it became clear to me that we were at a historic moment in AI. I became interested in how these systems worked and what aspiring implementers could learn from them.

Poker AI is one of the most challenging areas of artificial intelligence research. As a classic imperfect-information game, poker draws on the full range of relevant techniques, such as applied game theory, strategic reasoning under uncertainty, and opponent modeling. Unlike chess or Go, poker combines relatively simple rules with hidden information, bluffing, and social factors, which makes it resemble many of the decision problems we face in everyday life.

The Huge Breakthrough: Moving from Libratus to Pluribus

Three major successes reshaped the poker AI landscape and changed our perception of AI’s capabilities in imperfect-information games.

Libratus: The Heads-Up Champion (2017)

Developed by Tuomas Sandholm and Noam Brown at Carnegie Mellon University, Libratus made headlines when it defeated four top professionals in a 20-day heads-up no-limit Texas Hold’em competition. In their publication in Science, Sandholm said: “Libratus doesn’t try to figure out how humans play. It develops a strategy that is provably optimal against any opponent” (Brown & Sandholm, 2019, Science).

Libratus succeeded by running Counterfactual Regret Minimization (CFR) algorithms at enormous scale, using over 13 million core hours on the Bridges supercomputer at the Pittsburgh Supercomputing Center. Rather than solving the complete game tree of heads-up no-limit Hold’em, which is far too large to solve directly, Libratus computed a “blueprint” strategy for an abstracted version of the game and then refined its play in real time by solving subgames as each hand unfolded.

DeepStack: Real-Time Strategic Reasoning (2017)

At the same time, researchers at the University of Alberta, led by Michael Bowling, developed DeepStack, which combined deep learning with game-theoretic reasoning. “DeepStack is the first computer program to outplay human professionals at heads-up no-limit Texas hold’em poker,” the authors wrote in their Science paper (Moravčík et al., 2017).

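DeepStack’s key innovation was to compute its strategy in real time during play: it re-solved each situation with a limited-depth lookahead and used deep neural networks to estimate the value of positions beyond that depth, rather than precomputing a strategy for the entire game tree. This made expert-level play possible without a precomputed strategy for the full game.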

Pluribus: Six-Player Poker Breakthrough (2019)

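The biggest achievement came next: Pluribus, developed by Brown and Sandholm at CMU together with Facebook AI, became the first AI to defeat elite human professionals at six-player no-limit Texas Hold’em. This is far more impressive, because multiplayer poker is not only much larger than heads-up poker but also lacks the guarantees that make two-player zero-sum games tractable: with more than two players, playing a Nash equilibrium no longer ensures that you will not lose.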

“The techniques that we developed for Pluribus can be applied more broadly to other strategic interactions, including auctions, negotiations, cybersecurity, and other domains,” said Sandholm in their later paper (Brown & Sandholm, 2019).

Technical Underpinnings: Understanding the Architecture of Modern Poker AI

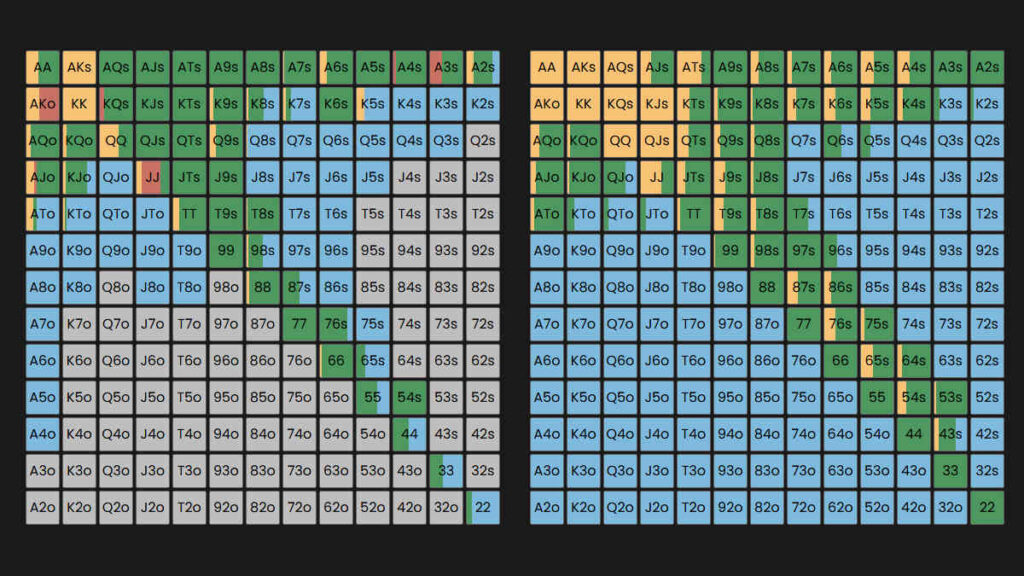

Game-Theoretic Optimal (GTO) Strategies

Modern poker AI systems are built on game-theoretic optimal (GTO) strategies, which are defined mathematically as strategies that an opponent cannot exploit. According to professional poker player and coach Darren Elias, “GTO play provides a baseline strategy that’s unexploitable, but the real skill comes in knowing when and how to deviate from GTO to exploit opponent weaknesses.”

The underlying mathematics is the Nash equilibrium, in which each player’s strategy is a best response to the strategies chosen by all other players. In two-player zero-sum poker, this means that an equilibrium strategy cannot lose money in expectation over the long run (averaged over both seat positions), no matter what strategy the opponent chooses. With three or more players, this guarantee no longer holds.
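To make “unexploitable” concrete, here is a minimal sketch that uses rock-paper-scissors as a stand-in for poker (an illustrative assumption; the payoff table and the `exploitability` helper are hypothetical, not from any library). It measures how much a best-responding opponent can win against a fixed strategy. The uniform mix, which is this game’s Nash equilibrium, concedes nothing, while always playing rock loses one unit per game.

```python
from fractions import Fraction

# Row player's payoffs in rock-paper-scissors (zero-sum: the opponent gets the negative).
# Action order: rock, paper, scissors.
RPS = [[0, -1, 1],
       [1, 0, -1],
       [-1, 1, 0]]


def exploitability(strategy):
    """Expected payoff a best-responding opponent earns against a fixed mixed strategy."""
    # A best response can always be found among the opponent's pure actions.
    return max(-sum(p * RPS[i][j] for i, p in enumerate(strategy)) for j in range(3))


uniform = [Fraction(1, 3)] * 3
always_rock = [1, 0, 0]
print(exploitability(uniform))      # 0: the equilibrium cannot be exploited
print(exploitability(always_rock))  # 1: the opponent simply plays paper
```

Exact fractions are used so that the equilibrium’s exploitability comes out as exactly zero rather than a tiny floating-point residue.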

Counterfactual Regret Minimization (CFR)

CFR algorithms are a core component of modern poker AI. A CFR solver plays the game against itself over many iterations and tracks the “regret” of each action at each decision point: the difference between the reward that action would have earned and the reward the current strategy actually earned. After each iteration, the strategy shifts toward actions with positive accumulated regret, and in two-player zero-sum games the average strategy over all iterations converges to a Nash equilibrium. (“Counterfactual” refers to weighting each regret by the probability that chance and the other players reach that decision point.)
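The regret update described above can be sketched with regret matching, the rule CFR applies at every decision point. This is a minimal illustration under stated assumptions: it runs plain regret matching in self-play on a hypothetical one-shot 2x2 zero-sum game (payoffs chosen for illustration), not full CFR on a game tree. Working the indifference conditions by hand, this game’s unique equilibrium has both players choose their first action with probability 0.4.

```python
# Payoffs to the row player in a hypothetical 2x2 zero-sum game;
# the column player receives the negative of each entry.
PAYOFF = [[2.0, -1.0],
          [-1.0, 1.0]]


def regret_matching(cum_regret):
    """Play each action in proportion to its positive cumulative regret (uniform if none)."""
    positive = [max(r, 0.0) for r in cum_regret]
    total = sum(positive)
    if total > 0:
        return [r / total for r in positive]
    return [1.0 / len(cum_regret)] * len(cum_regret)


def self_play(iterations):
    regret_row, regret_col = [0.0, 0.0], [0.0, 0.0]
    sum_row, sum_col = [0.0, 0.0], [0.0, 0.0]
    for _ in range(iterations):
        x = regret_matching(regret_row)  # row player's current mixed strategy
        y = regret_matching(regret_col)  # column player's current mixed strategy
        # Expected payoff of each pure action against the opponent's current mix.
        row_values = [sum(PAYOFF[i][j] * y[j] for j in range(2)) for i in range(2)]
        col_values = [-sum(PAYOFF[i][j] * x[i] for i in range(2)) for j in range(2)]
        row_value = sum(p * v for p, v in zip(x, row_values))
        col_value = sum(p * v for p, v in zip(y, col_values))
        for a in range(2):
            # Regret: what action `a` would have earned minus what the mix actually earned.
            regret_row[a] += row_values[a] - row_value
            regret_col[a] += col_values[a] - col_value
            sum_row[a] += x[a]
            sum_col[a] += y[a]
    # The *average* strategy (not the last one) is what converges to equilibrium.
    return [s / iterations for s in sum_row], [s / iterations for s in sum_col]


avg_row, avg_col = self_play(50_000)
print(avg_row, avg_col)  # both should be close to [0.4, 0.6]
```

The current strategies keep oscillating; only their running averages settle near the equilibrium, which is why CFR-based solvers report the average policy.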

Recent developments include Monte Carlo CFR (MCCFR), which samples parts of the game tree on each iteration instead of traversing all of it, and Deep CFR, which uses neural networks in place of CFR’s lookup tables while keeping traditional CFR updates where useful. Both make it practical to work with vast state spaces, and follow-up work has reported improvements in convergence and memory use (Steinberger, 2019; Li et al., 2020).

Neural Network Integration

Many modern poker AI systems incorporate deep learning. Researchers at Facebook AI Research introduced Deep CFR, a version of CFR that uses neural networks to approximate regret and strategy functions, considerably reducing memory requirements compared with table-based CFR without a large loss in performance.

Neural network integration often includes:

- Value networks that approximate the expected value of each game state

- Policy networks that output a probability for each available action

- Opponent modeling networks that estimate an individual player’s behavioral tendencies
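As a rough illustration of the first two items, here is a toy two-headed network in NumPy: a shared hidden layer feeding a policy head (action probabilities) and a value head. This is a hypothetical sketch, not any published architecture. The weights are random and untrained, and the 10-feature state encoding and three-action output (fold/call/raise) are assumptions. A real system would train such weights (for example, inside Deep CFR) with a deep learning framework, and an opponent-modeling network could be added as a third head that predicts an opponent’s action distribution.

```python
import numpy as np

rng = np.random.default_rng(0)


class PolicyValueNet:
    """Hypothetical toy network: shared ReLU layer, a policy head, and a value head.
    Weights are random and untrained; this only illustrates the data flow."""

    def __init__(self, n_features, n_actions, n_hidden=32):
        self.w1 = rng.normal(0.0, 0.1, (n_features, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.w_policy = rng.normal(0.0, 0.1, (n_hidden, n_actions))
        self.w_value = rng.normal(0.0, 0.1, (n_hidden, 1))

    def forward(self, features):
        hidden = np.maximum(0.0, features @ self.w1 + self.b1)  # shared ReLU layer
        logits = hidden @ self.w_policy
        probs = np.exp(logits - logits.max(axis=-1, keepdims=True))
        probs /= probs.sum(axis=-1, keepdims=True)  # softmax: action probabilities
        value = np.tanh(hidden @ self.w_value).squeeze(-1)  # value squashed to [-1, 1]
        return probs, value


net = PolicyValueNet(n_features=10, n_actions=3)  # e.g. fold / call / raise
batch = rng.normal(size=(4, 10))                  # 4 hypothetical encoded game states
probs, value = net.forward(batch)
print(probs.shape, value.shape)  # (4, 3) (4,)
```

Sharing the hidden layer between heads is a common design choice because both outputs depend on the same features of the game state.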

Practical Example: Building Your Own Educational Poker AI

Key Python Libraries and Frameworks

In current poker AI research and practice, a few libraries have become standard tools for development:

OpenSpiel: Google’s Multi-Game Framework

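```python
import pyspiel
from open_spiel.python.algorithms import cfr

# Initialize a poker game and its initial state (before any cards are dealt)
game = pyspiel.load_game("leduc_poker")
state = game.new_initial_state()

# The first nodes are chance nodes that deal the private cards
print(state.is_chance_node())
print(game.num_players(), game.num_distinct_actions())

# A tabular CFR solver for the same game
solver = cfr.CFRSolver(game)
```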

OpenSpiel, developed by Google DeepMind (Lanctot et al., 2019), provides many games, including Kuhn and Leduc poker, along with implementations of game-theoretic algorithms such as several CFR variants. It is widely used in academic game AI research and is a strong candidate for the primary library in academic poker AI work.

PyPokerEngine: Simulation Environment

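```python
from pypokerengine.api.game import setup_config, start_poker
from pypokerengine.players import BasePokerPlayer

class ResearchBot(BasePokerPlayer):
    def declare_action(self, valid_actions, hole_card, round_state):
        call_action = valid_actions[1]  # placeholder strategy: always call; implement yours here
        return call_action["action"], call_action["amount"]

    # The engine also sends these notifications; no-op handlers are enough to start
    def receive_game_start_message(self, game_info): pass
    def receive_round_start_message(self, round_count, hole_card, seats): pass
    def receive_street_start_message(self, street, round_state): pass
    def receive_game_update_message(self, action, round_state): pass
    def receive_round_result_message(self, winners, hand_info, round_state): pass
```

To run a simulation, create a config with `setup_config(max_round=10, initial_stack=100, small_blind_amount=5)`, add players with `config.register_player(name="bot1", algorithm=ResearchBot())`, and pass the config to `start_poker(config, verbose=1)`.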

PokerRL: Reinforcement Learning Framework

PokerRL is a framework for applying multi-agent deep reinforcement learning to poker. It supports several poker variants and lets you configure your own training runs.

Computational Demands and Training Methods

Training a modern poker AI requires substantial computational resources. Reported figures include:

- Libratus: more than 13 million core hours on a supercomputing cluster

- Pluribus: 12,400 core hours to compute its initial (blueprint) strategy, plus real-time search during play

- Academic projects: often somewhere between 100 and 1,000 GPU hours to reach a useful result

For academic research, universities usually work with simplified poker variants instead:

- Leduc Poker: a small poker variant played with a six-card deck and two betting rounds, widely used for learning and benchmarking CFR algorithms.

- Kuhn Poker: an even simpler three-card game with a single betting round, ideal for understanding the basic pieces.

- Heads-up Limit Hold’em: significantly simpler than no-limit games.
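Kuhn Poker is small enough to solve with vanilla CFR in plain Python. The sketch below is a minimal, self-contained implementation written for illustration (not taken from OpenSpiel or any other library; all names are hypothetical). Each iteration it enumerates all six possible deals, applies regret matching at every information set, and accumulates the average strategy, which it then evaluates exactly. The first player’s equilibrium value in Kuhn Poker is known to be -1/18 (about -0.056 chips per hand), so the printed value should come out close to that.

```python
import itertools

ACTIONS = "pb"  # p = pass (check or fold), b = bet (bet or call)
TERMINALS = {"pp", "bp", "pbp", "bb", "pbb"}
DEALS = list(itertools.permutations(range(3), 2))  # 6 equally likely deals; 0=J, 1=Q, 2=K


def payoff_p0(cards, history):
    """Chips won by player 0 at a terminal history (each player antes 1)."""
    if history == "bp":   # player 1 folded to a bet
        return 1.0
    if history == "pbp":  # player 0 folded to a bet
        return -1.0
    stake = 2.0 if history.endswith("bb") else 1.0  # bet called vs. checked down
    return stake if cards[0] > cards[1] else -stake


class InfoSet:
    def __init__(self):
        self.regret_sum = [0.0, 0.0]
        self.strategy_sum = [0.0, 0.0]

    def current_strategy(self):
        """Regret matching: probabilities proportional to positive cumulative regret."""
        positive = [max(r, 0.0) for r in self.regret_sum]
        total = sum(positive)
        return [p / total for p in positive] if total > 0 else [0.5, 0.5]

    def average_strategy(self):
        total = sum(self.strategy_sum)
        return [s / total for s in self.strategy_sum] if total > 0 else [0.5, 0.5]


def cfr(nodes, frozen, cards, history, reach):
    """Return player 0's expected utility below `history`, updating regrets on the way."""
    if history in TERMINALS:
        return payoff_p0(cards, history)
    player = len(history) % 2
    key = str(cards[player]) + history  # own card + public betting history
    node = nodes.setdefault(key, InfoSet())
    if key not in frozen:  # use one strategy per info set for the whole iteration
        frozen[key] = node.current_strategy()
    strategy = frozen[key]
    child_values = []
    for prob, action in zip(strategy, ACTIONS):
        child_reach = list(reach)
        child_reach[player] *= prob
        child_values.append(cfr(nodes, frozen, cards, history + action, child_reach))
    node_value = sum(p * v for p, v in zip(strategy, child_values))
    sign = 1.0 if player == 0 else -1.0  # flip to the acting player's point of view
    for i in range(2):
        # Counterfactual regret, weighted by the opponent's probability of reaching here.
        # Chance weights are equal for all deals, so they are omitted.
        node.regret_sum[i] += reach[1 - player] * sign * (child_values[i] - node_value)
        node.strategy_sum[i] += reach[player] * strategy[i]
    return node_value


def train(iterations):
    nodes = {}
    for _ in range(iterations):
        frozen = {}
        for cards in DEALS:
            cfr(nodes, frozen, cards, "", [1.0, 1.0])
    return nodes


def expected_value(nodes, cards, history=""):
    """Player 0's exact expected utility when both players use their average strategies."""
    if history in TERMINALS:
        return payoff_p0(cards, history)
    player = len(history) % 2
    strategy = nodes[str(cards[player]) + history].average_strategy()
    return sum(p * expected_value(nodes, cards, history + a) for p, a in zip(strategy, ACTIONS))


nodes = train(10_000)
game_value = sum(expected_value(nodes, cards) for cards in DEALS) / len(DEALS)
print(f"Player 0 value: {game_value:.4f} (theory: {-1 / 18:.4f})")
```

Freezing each information set’s strategy for a whole iteration keeps this faithful to vanilla CFR, where all deals are evaluated against the same strategy profile before it is updated. Sampling one deal per iteration instead would turn it into chance-sampled MCCFR.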

Sample Implementation Architecture

```python
import pyspiel
from open_spiel.python.algorithms import cfr
from open_spiel.python.algorithms import exploitability


class EducationalPokerAI:
    """Thin wrapper around OpenSpiel's tabular CFR solver for small poker games."""

    def __init__(self, game_name: str = "leduc_poker"):
        self.game = pyspiel.load_game(game_name)
        self.cfr_solver = cfr.CFRSolver(self.game)
        self.training_iterations = 0

    def train(self, iterations: int = 10000, log_every: int = 1000):
        """Train the AI using the CFR algorithm, reporting exploitability periodically."""
        for i in range(iterations):
            self.cfr_solver.evaluate_and_update_policy()
            if i % log_every == 0:
                expl = self.calculate_exploitability()
                print(f"Iteration {i}: Exploitability = {expl:.5f}")
        self.training_iterations += iterations

    def get_strategy(self, state):
        """Return {action: probability} from the average policy at the given state."""
        return self.cfr_solver.average_policy().action_probabilities(state)

    def calculate_exploitability(self) -> float:
        """How exploitable the current average strategy is (0 at a Nash equilibrium)."""
        return exploitability.exploitability(self.game, self.cfr_solver.average_policy())
```

For a quick check, `EducationalPokerAI("kuhn_poker").train(iterations=2000)` should print exploitability values falling toward zero.

Real-World Applications and Case Studies

Academic research shows that poker AI techniques have uses well beyond games, for example:

- Cybersecurity: strategic reasoning when defending a network

- Financial Markets: auction mechanisms, trading strategies

- Negotiation: multi-party negotiations over how to allocate resources

- Military Strategy: reasoning strategically under incomplete information and uncertainty

Academic Value in Computer Science Curricula

Several leading universities, such as Carnegie Mellon, the University of Alberta, and MIT, include poker AI projects in their CS curricula. These projects expose students to:

- Algorithm Design: implementing and optimizing CFR algorithms.

- Game Theory: computing Nash equilibria and reasoning strategically over game trees.

- Machine Learning: using neural networks to complement classical algorithms.

- Software Engineering: building complex systems with many interacting components.

Expert and Industry Perspective

From an Academic Perspective

As Dr. Michael Bowling of the University of Alberta describes it, “Poker AI research pushes the boundaries of what’s possible in strategic reasoning under uncertainty. The techniques we develop have applications in cybersecurity, auctions, and any domain where you need to make decisions with incomplete information.”

A Professional Player’s Perspective

Professional player and coach Darren Elias explained the educational side of poker AI: “Understanding GTO concepts through AI research has revolutionized how we think about poker strategy. Even if you never build a bot, studying these algorithms makes you a better strategic thinker.”

Industry Applications

Recent developments in poker AI have attracted interest from major technology companies. Google DeepMind, Facebook AI Research, and others continue to invest in this area because of its implications for AI more broadly.

Recent Developments in Academia (2020-2025)

The field is still developing quickly. There have been a few major developments, notably:

Enhanced CFR variants

- Neural Fictitious Self-Play (NFSP): not a CFR variant but a related self-play approach that combines fictitious play with deep reinforcement learning, allowing it to handle large state spaces more effectively

- Deep CFR, which uses neural-network function approximation to reduce memory requirements during learning

- Regret-based pruning, which temporarily skips actions with negative regret to speed up convergence in practice

Multi-Agent Learning

The research agenda has expanded from two-player games to multiplayer and multi-agent settings, with applications of wider interest to students and researchers, including:

- Auction design

- Resource allocation

- Interaction and collaboration with AI systems

Explainable AI in strategic settings

Finally, recent work on explaining the decisions of poker AI systems matters both for education and for eventual real-world deployment.

Future Directions and Learning Paths

For Future Researchers

Any student interested in game AI, and poker AI in particular, should consider the following:

- A strong mathematical foundation in game theory, probability, and optimization

- Programming experience, ideally in Python or C++, and familiarity with machine learning architectures

- An understanding of decision-making algorithms, particularly CFR, MCTS, and neural networks

- Experience implementing your own simplified poker simulator

What is the best order in which to study poker AI?

- Start with Kuhn Poker: implement a simple CFR algorithm.

- Move on to Leduc Poker: it is more complex but still manageable.

- Explore existing implementations such as OpenSpiel and PokerRL.

- Explore what neural networks add through Deep CFR variants.

- Study applications beyond poker in other strategic settings.

Conclusion on Educational Value

Poker AI represents one of the most intellectually engaging pursuits in computer science education. The opportunity to explore rigorous mathematical theory while solving practical programming problems allows students to engage fully with artificial intelligence, game theory, and software engineering.

While Libratus, DeepStack, and Pluribus show how far AI development has come, the greatest value of poker AI lies not in building systems that exploit poker games, but in understanding how to reason strategically, in a principled way, under uncertainty.

As we grapple with important challenges in cybersecurity, financial markets, and multi-agent systems, the techniques developed in poker AI research serve as valuable tools for thinking about real-world problems. For students and researchers, poker AI offers a lower-barrier entry point into some of the most sophisticated areas of contemporary artificial intelligence.

The path forward is to carry the techniques developed for poker into broader domains while preserving the academic value of poker AI research. If we stay committed to education, open-source transparency, and responsible development, and remain mindful of how people interact with intelligent systems capable of strategic reasoning, poker AI will have lasting significance.

References and Further Reading

- Brown, N., & Sandholm, T. (2019). Superhuman AI for multiplayer poker. Science, 365(6456), 885-890.

- Moravčík, M., et al. (2017). DeepStack: Expert-level artificial intelligence in heads-up no-limit poker. Science, 356(6337), 508-513.

- Steinberger, E. (2019). Single Deep Counterfactual Regret Minimization. arXiv preprint arXiv:1901.07621.

- Li, X., et al. (2020). Neural Fictitious Self-Play in Imperfect Information Games. Proceedings of the AAAI Conference on Artificial Intelligence.

- Lanctot, M., et al. (2019). OpenSpiel: A framework for reinforcement learning in games. arXiv preprint arXiv:1908.09453.

Frequently Asked Questions (FAQ)

Are poker bots illegal?

There is no single answer. Automated bots on commercial online sites are usually prohibited by the site’s terms of service and may also violate local laws. These automated systems should only be used for education and research.

Why is poker AI different than chess AI?

Chess and Go are perfect-information games, meaning the entire game state is visible to both players. Poker is an imperfect-information game, with hidden cards, bluffing, and opponent modeling, which makes poker strategy much closer to real-world decision making under uncertainty.

If I’d like to learn poker AI, where do I start?

Start with simple educational games and work upward: Kuhn Poker → Leduc Poker → a basic CFR implementation → larger frameworks such as OpenSpiel. This gives an accessible path toward studying more complex systems, such as PokerRL or Deep CFR.

How much computing power is needed to train a poker AI?

For typical academic prototypes, 100–1,000 GPU hours is often sufficient. State-of-the-art systems needed far more: about 12,400 core hours for Pluribus’s blueprint strategy and more than 13 million core hours on a supercomputer for Libratus.

Is there any value to studying poker AI if I am not going to build a bot?

Certainly. If you understand CFR, GTO, and multi-agent reasoning, that knowledge can be applied to a range of areas, such as cybersecurity, negotiations, and financial markets.

What poker AI research directions are interesting beyond 2025?

Likely important areas include explainable AI (XAI), large-scale multi-agent learning, and applying CFR-based methods to domains beyond poker.