What Is Exploitability in Poker Bots – And How to Reduce It?

Poker bot exploitability grows quietly: not in flames, not in obvious mistakes, but as an unsettling unease about small percentages. At first, only a few anomalies appear. The bot is executing properly. It bluffs at the proper times. It value bets aggressively. And it folds when folding is correct, even when the fold hurts. Yet after tens of thousands of hands, a trend develops: a very skilled player beats the computer consistently, not by playing better poker, but by finding vulnerabilities within the strategy, small consistent holes in its armor. Again, not by chance, but through poker bot exploitability.

Poker Bot Exploitability: An Invisible Shadow of Unknown Measures

For many of the engineers working in the gray areas of AI poker, exploitability is not a measure; it’s a phantom. According to one author of a recent paper, it gives a mathematical way to quantify the average loss of a strategy when it is played against a perfectly rational opponent: in other words, an opponent that knows the strategy’s weaknesses and does nothing but attack them. For players who want to achieve GTO (game-theory-optimal) play, poker bot exploitability is the difference between how a strategy should be executed and how it is actually being executed.
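To make the definition concrete, here is a minimal sketch in a game small enough to check by hand: rock-paper-scissors rather than poker. The payoff matrix, the function names, and the rock-heavy example strategy are illustrative assumptions, not taken from any particular bot or paper. Exploitability is computed as what a best-responding opponent wins per game beyond the game's equilibrium value.

```python
from typing import List, Sequence

# Row player's payoffs in rock-paper-scissors (rows and columns: R, P, S).
# The game is zero-sum, so the column player's payoff is the negation.
RPS_PAYOFF: List[List[float]] = [
    [0.0, -1.0, 1.0],
    [1.0, 0.0, -1.0],
    [-1.0, 1.0, 0.0],
]


def best_response_value(payoff: Sequence[Sequence[float]],
                        row_strategy: Sequence[float]) -> float:
    """Expected win of a column player who knows row_strategy exactly."""
    n_cols = len(payoff[0])
    # Column player's expected payoff for each of its pure actions.
    col_values = [
        -sum(p * payoff[r][c] for r, p in enumerate(row_strategy))
        for c in range(n_cols)
    ]
    return max(col_values)


def exploitability(payoff: Sequence[Sequence[float]],
                   row_strategy: Sequence[float],
                   game_value: float = 0.0) -> float:
    """Best responder's win beyond what equilibrium play would concede.

    game_value is the row player's equilibrium value (0 for RPS).
    """
    return best_response_value(payoff, row_strategy) + game_value


if __name__ == "__main__":
    uniform = [1 / 3, 1 / 3, 1 / 3]
    rock_heavy = [0.4, 0.3, 0.3]
    print("uniform:", exploitability(RPS_PAYOFF, uniform))
    print("rock-heavy:", exploitability(RPS_PAYOFF, rock_heavy))
```

The uniform strategy cannot be exploited at all, while the rock-heavy strategy gives up about 0.1 units per game to an opponent who always plays paper. In poker the same idea applies, except that the best response has to be computed or approximated over an enormous game tree.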

Let’s consider a strategy that slightly over-folds in a specific river spot. Not a lot. Just a little more than the equilibrium suggests. A human might not catch the flaw. A poorly constructed bot might also miss it. However, an extremely advanced AI that has been optimized to take advantage of an opponent’s weaknesses will perceive it as an opportunity. The over-fold is where the opponent gets in: a slight wound that, with enough pressure, can become a large cut.
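The arithmetic behind that slight over-fold can be sketched in a few lines. This is a toy model under stated assumptions: the bluff has no equity when called, and the pot size (100 chips), bet size (75 chips), and 47% fold rate are made-up numbers chosen only for illustration.

```python
def breakeven_fold_frequency(pot: float, bet: float) -> float:
    """Fold frequency at which a zero-equity bluff exactly breaks even."""
    return bet / (pot + bet)


def bluff_ev(pot: float, bet: float, fold_frequency: float) -> float:
    """Expected chips won per bluff, assuming the bluff always loses when called."""
    win_when_folds = fold_frequency * pot
    lose_when_called = (1.0 - fold_frequency) * bet
    return win_when_folds - lose_when_called


if __name__ == "__main__":
    pot, bet = 100.0, 75.0
    print("break-even fold frequency:", breakeven_fold_frequency(pot, bet))
    print("EV of bluffing vs. 47% folds:", bluff_ev(pot, bet, 0.47))
```

With these numbers the break-even fold frequency is 75/175, roughly 42.9%. A bot that folds 47% of the time in that spot hands the bluffer about 7.25 chips on every attempt, which is invisible in one hand and very visible over thousands.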

Calculating Poker Bot Exploitability

One would think that these shortcomings would be easy to recognize. However, again, poker is a game of ghosts. The perfect response to an opponent is a ghost: theoretical, omniscient, and patient. When calculating the exploitability of a strategy, you are typically sampling the ghost, with Local Best Response (LBR) rollouts or deep Monte Carlo estimates. The authors of Robson describe exploitability down to the decimal places, using measures such as milli-big-blinds per game (mbb/g). At this point, poker bot exploitability of approximately 1 mbb/g is generally considered elite. Values in the single digits, or around 10 mbb/g, are generally okay; 300 is a hole in the dam.
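Because these figures come from sampled hands, the estimate is only as good as the sample. The sketch below converts per-hand results (in big blinds) to mbb/g and attaches a rough 95% interval. It assumes independent hands and a normal approximation; the function names and the 10 big blinds per hand standard deviation in the usage example are hypothetical, not measured values.

```python
import math
import statistics
from typing import Sequence, Tuple


def mbb_per_game(results_bb: Sequence[float]) -> Tuple[float, float]:
    """Return (mean in mbb/g, approximate 95% half-width in mbb/g).

    results_bb holds one entry per hand: big blinds won (+) or lost (-).
    """
    n = len(results_bb)
    mean_bb = statistics.fmean(results_bb)
    stderr_bb = statistics.stdev(results_bb) / math.sqrt(n)
    # 1 big blind = 1000 milli-big-blinds.
    return 1000.0 * mean_bb, 1000.0 * 1.96 * stderr_bb


def hands_needed(stdev_bb: float, half_width_mbb: float) -> int:
    """Hands required for the 95% interval to shrink to +/- half_width_mbb."""
    half_width_bb = half_width_mbb / 1000.0
    return math.ceil((1.96 * stdev_bb / half_width_bb) ** 2)


if __name__ == "__main__":
    sample = [0.5, -0.5, 1.0, -1.0, 0.05]
    print(mbb_per_game(sample))
    print(hands_needed(stdev_bb=10.0, half_width_mbb=1.0))
```

Under the assumed standard deviation, resolving a difference of 1 mbb/g by plain sampling takes hundreds of millions of hands, which is one reason a single-digit exploitability figure is hard to confirm empirically.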

As of 2025, there is still no publicly available poker bot that can play GTO-style poker at scale in 6-max NLH (no-limit hold’em). Heads-up? We are getting closer. However, the sheer number of possible decisions, the combinatorial explosion, is massive. Thus, programmers model, abstract, solve, re-solve, and continuously check. The gap that remains is the measure of poker bot exploitability at true scale.

A Slow Leak Emerges

Exploitability emerges quietly. It typically originates as the price of a shortcut: a hand grouping that lumps together subtly different holdings, or a bet abstraction that reduces the subtlety of judgment to a handful of reasonable sizes. Frequently, it is the result of a function approximation error: a neural network learns to estimate expected value from millions of examples in a simulated environment, but fails when it meets an edge case it has never seen before. Sometimes, it is an engineering decision made under duress: a pseudo-random number generator that is not cryptographically secure, a predictable timing pattern, or a sub-game that was solved using assumptions that are no longer valid.

As he explained, “What makes these types of issues so interesting isn’t just that they exist, but that they resonate. One predictable river raise cannot cause damage. But what if it is predictable and occurs regularly on common board configurations? The bot becomes transparent. Exploitable, and that increases poker bot exploitability.”
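The randomness and timing leaks mentioned above are the easiest of these to illustrate. The following is a minimal sketch, not a hardened implementation: it samples an action from a mixed strategy using Python's `secrets.SystemRandom`, which draws from the operating system's entropy source, and adds a random delay that does not depend on the hand. The function names, the strategy dictionary format, and the delay values are assumptions made for the example.

```python
import secrets
from typing import Dict

# SystemRandom uses the OS entropy source instead of a seedable PRNG,
# so its output cannot be reconstructed from earlier samples.
_rng = secrets.SystemRandom()


def sample_action(strategy: Dict[str, float]) -> str:
    """Pick one action from a mixed strategy given as {action: probability}."""
    actions = list(strategy)
    weights = [strategy[action] for action in actions]
    return _rng.choices(actions, weights=weights, k=1)[0]


def jittered_delay(base_seconds: float = 1.5,
                   spread_seconds: float = 1.0) -> float:
    """Return a delay in [base, base + spread] that ignores hand strength."""
    return base_seconds + _rng.uniform(0.0, spread_seconds)


if __name__ == "__main__":
    river_strategy = {"fold": 0.3, "call": 0.5, "raise": 0.2}
    print(sample_action(river_strategy), round(jittered_delay(), 2))
```

The design point is that neither the sampled action nor the time taken to act should carry information beyond what the mixed strategy itself already reveals.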

Solutions to Poker Bot Exploitability

So what is the remedy? There is none, not exactly. However, there are several methods that, alone or together, reduce the possibility of exploitation.

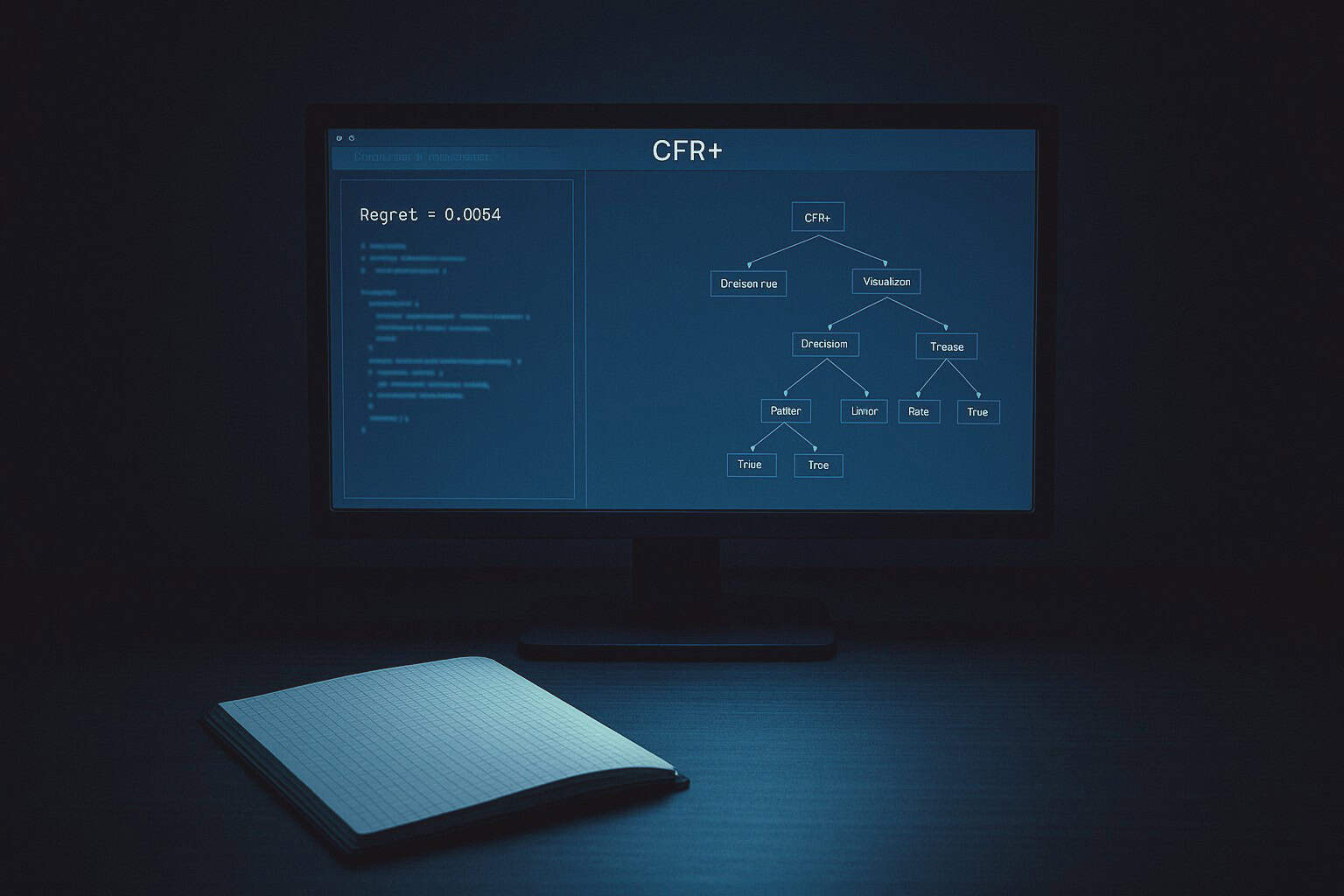

- CFR and associated algorithms: Counterfactual Regret Minimization (CFR) and its numerous variants, including CFR+, discounted variants such as DCFR+, and Deep CFR (which replaces tabular storage with neural networks), are the basis of most AI poker strategies. These are iterative learning systems in which the AI repeatedly plays against itself until its average regret approaches zero. Nonetheless, CFR-type algorithms require millions, and in most cases billions, of iterations to approach minimal exploitability.

- Safe Sub-Game Solving: This was the area in which the bots Libratus and DeepStack were successful. They did not rely solely on precomputed plans. As play moved down the tree, they recalculated, refined, and bounded their risk. “Do not create a strategy that is more exploitable than your baseline” is a motto of safe AI poker.

- Discipline in Randomness: Even this is hard. If your pseudo-random number generator (PRNG) is not cryptographically secure, or if your actions arrive in a repetitive rhythm, an observant opponent can reverse-engineer your reasoning. The best bots jitter, in both strategy and speed.

- Ongoing Stress Testing: Periodic LBR testing, adversarial self-play, and the injection of random off-tree bets are all components of a comprehensive program to test a bot’s reliability. Bots do not grow in isolation; they grow through adversity.
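The self-play idea behind the CFR family can be sketched with its basic building block, regret matching, on rock-paper-scissors. This is not full CFR (there is no game tree and no counterfactual weighting), and the payoff matrix, function names, and the deliberately lopsided starting regrets are assumptions chosen for illustration. Both players update on expected values, so the run is deterministic.

```python
from typing import List, Sequence

# Row player's payoffs (R, P, S); the column player receives the negation.
RPS = [[0.0, -1.0, 1.0], [1.0, 0.0, -1.0], [-1.0, 1.0, 0.0]]


def regret_matching(regrets: Sequence[float]) -> List[float]:
    """Play each action in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total <= 0.0:
        return [1.0 / len(regrets)] * len(regrets)
    return [p / total for p in positive]


def self_play(payoff: Sequence[Sequence[float]], iterations: int,
              initial_row_regrets: Sequence[float]) -> List[List[float]]:
    """Return the average strategies [row, column] after self-play.

    Assumes a square payoff matrix (same number of actions per player).
    """
    n = len(payoff)
    regrets = [list(initial_row_regrets), [0.0] * n]
    strategy_sums = [[0.0] * n, [0.0] * n]
    for _ in range(iterations):
        row = regret_matching(regrets[0])
        col = regret_matching(regrets[1])
        # Expected payoff of every pure action against the opponent's mix.
        row_values = [sum(payoff[a][c] * col[c] for c in range(n)) for a in range(n)]
        col_values = [-sum(payoff[r][a] * row[r] for r in range(n)) for a in range(n)]
        row_ev = sum(row[a] * row_values[a] for a in range(n))
        col_ev = sum(col[a] * col_values[a] for a in range(n))
        for a in range(n):
            regrets[0][a] += row_values[a] - row_ev
            regrets[1][a] += col_values[a] - col_ev
            strategy_sums[0][a] += row[a]
            strategy_sums[1][a] += col[a]
    return [[s / iterations for s in sums] for sums in strategy_sums]


def row_exploitability(payoff: Sequence[Sequence[float]],
                       row_strategy: Sequence[float]) -> float:
    """Best-responding column player's win (the game value of RPS is 0)."""
    n = len(payoff)
    return max(-sum(row_strategy[r] * payoff[r][c] for r in range(n))
               for c in range(n))


if __name__ == "__main__":
    for t in (10, 100, 10_000):
        avg_row, _ = self_play(RPS, t, initial_row_regrets=[1.0, 0.0, 0.0])
        print(t, row_exploitability(RPS, avg_row))
```

The row player starts out committed to rock, and it is the average strategy, not the current one, that drifts toward the unexploitable uniform mix as iterations accumulate. The same slow convergence is why full-scale solvers need so many iterations.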

The Continuing Tension

GTO is the goal, exploitation is the siren’s song, and lower poker bot exploitability is the life jacket. Pure GTO play is invulnerable but unresponsive: it lets weaker opponents keep money that a targeted strategy would win. Exploitation, by contrast, takes more money from the fish but leaves the bot open to the sharks. So almost every advanced bot contains a mix of the two approaches: a low-exploitability core with opportunistic overlays, with the leak continually monitored by the sysop.
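One simple way to picture a core-plus-overlay design is a capped blend at a single decision point. The sketch below is a hypothetical illustration, not a description of how any specific bot combines the two; the function name, the dictionary format, and the 20% overlay weight are assumptions.

```python
from typing import Dict


def blend_strategies(core: Dict[str, float], overlay: Dict[str, float],
                     overlay_weight: float) -> Dict[str, float]:
    """Mix a low-exploitability core with an exploitative overlay.

    overlay_weight in [0, 1] caps how far play may drift from the core.
    """
    if not 0.0 <= overlay_weight <= 1.0:
        raise ValueError("overlay_weight must be between 0 and 1")
    actions = sorted(set(core) | set(overlay))
    return {
        action: (1.0 - overlay_weight) * core.get(action, 0.0)
        + overlay_weight * overlay.get(action, 0.0)
        for action in actions
    }


if __name__ == "__main__":
    core = {"fold": 0.5, "call": 0.5}   # near-equilibrium mix
    overlay = {"call": 1.0}             # read: this opponent over-bluffs
    print(blend_strategies(core, overlay, overlay_weight=0.2))
```

Keeping the overlay weight small limits how much the bot can lose if its read on the opponent turns out to be wrong, at the cost of capturing only part of the available edge.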

Therefore, the tension continues. Every attempt to exploit someone increases the risk, and every abstraction is a simplification of the real game. The primary reason is that poker, like other imperfect-information games, never provides clear, immediate, and complete feedback, only noisy, delayed signals.

We continue to ask: How exploitable is this strategy? How likely is this weakness to be found, and who will find it? Can I afford the gap? Should I merge this hand grouping with that one? Finally, there is a deeper question: How far are we, really, from solving the game?

Perhaps we are not as close as we thought. But we are certainly closer than we were yesterday.