How to Train a Poker Bot with Hand History Logs

The idea behind poker bots is that they have no interest in your “bad beat” stories. When the beat happens anyway, they don’t like the beat either. What they care about is the log file. The plaintext timestamped table listing the stack depth and dollar and cents details on each player’s account of what happened is the log file.

You’ve probably spent many late nights watching them, the files the vast majority of most players save to double up again over an iffy river call. I’ve done it myself. Watching them until the hands blend together until “UTG raises to $3” seems less an action than a crack in the matrix.

Somewhere in this mess, a poker bot will learn. This is where poker bot hand history training begins — converting raw, unorganized logs into the base strategic knowledge of an AI.

Cleaning and Parsing Poker Hand History Logs

Poker hand history logs are chaotic and disorganized. PokerStars, GGPoker, and WSOP each have their own quirks. Blinds may appear at the beginning of the hand or three lines later, sometimes in a format that appears to be from 2004 (because it is).

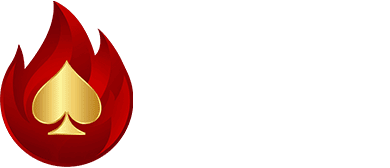

First, you need to clean up the logs. Standardize the stack sizes in big blinds. Use a standard format to record the actions taken by the players. Convert card values to machine readable binary vector representations. The AI does not “see” Ace of Spades — it sees a 1 in position 12 of a 52 bit array. Romantic, I know.

The cleaner you are when you parse the logs, the less junk is in the bot’s mind. I once spent three days finding a bug in my parser that caused phantom raises from the small blind to be reported as half of the time. The bot was hero folding kings before the flop. Humbling? Yes. Educational? More so.

Converting Hand History Logs into Poker Bot Training Data

This is the magic: A poker bot doesn’t need your impassioned strategic pep talk to succeed. It needs a stream of structured state-action pairs.

You can break down each hand based on the number of decision points: pot size, position, stack size, board texture, prior betting. Add some fake items such as pot odds, implied odds, SPR, fold equity. Add some go-to words: fold, call, raise, with the different types of bets.

When the bot is using supervised learning, the bot simply follows the path laid out by the other players. Behavioral Cloning. Thousands, maybe even millions, of decision-making opportunities from strong players. Teaching a parrot to talk is similar to teaching a parrot to make decisions, except the parrot may occasionally 3-bet light from the cut-off. The extraction of structured actions from logs is a critical aspect of poker bot hand history training, and transforms those actions into executable decision models.

When the bot is using reinforcement learning, hand histories are a lot like a mirror. While the hand histories may provide guidance to fine-tune behavior relative to real-world phenomenon, they’re not the primary source of fuel (self-play generates much more diversified data).

Using CFR and Deep Learning in Poker Bot Training

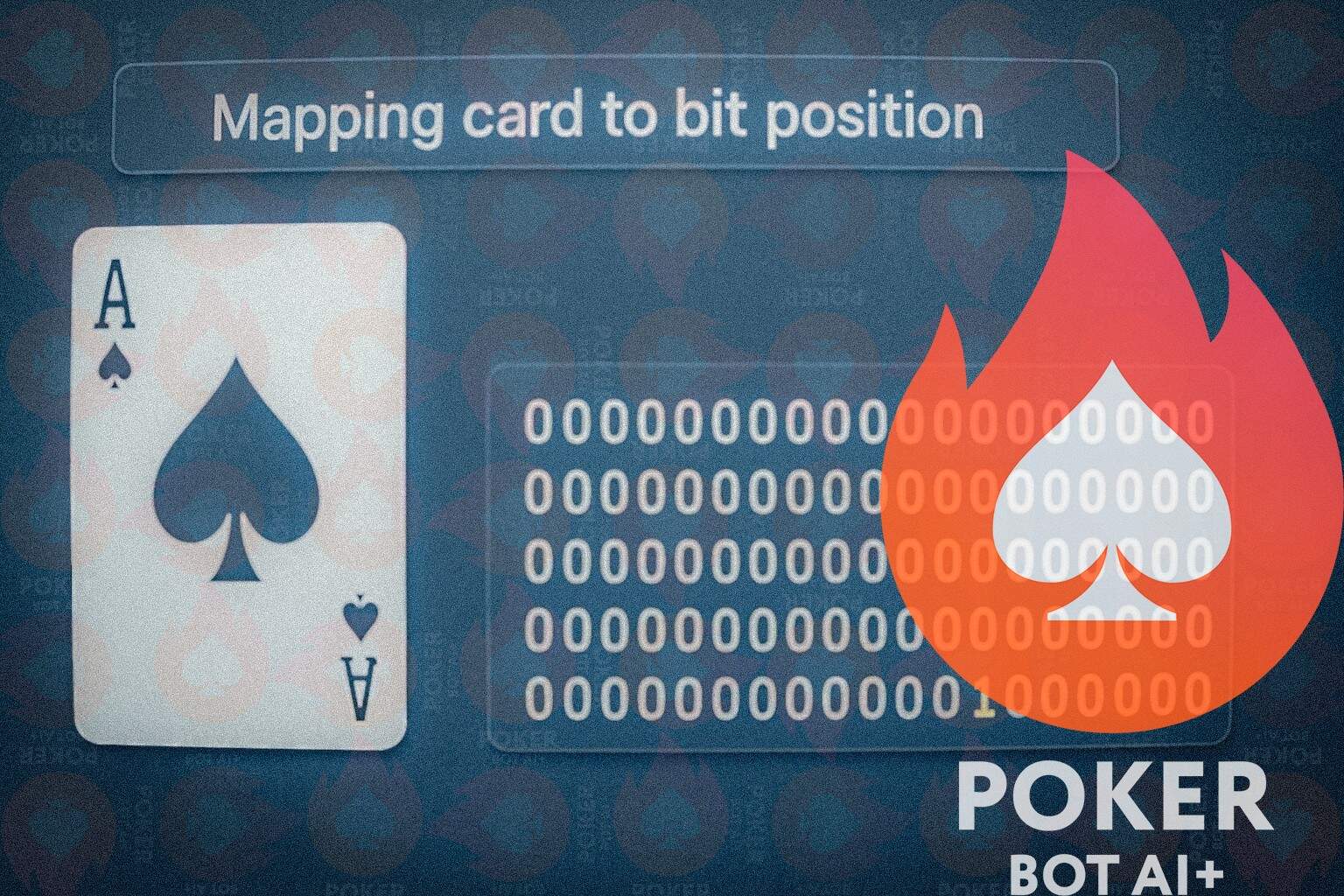

Counterfactual Regret Minimization (CFR) remains the reigning champion. The bot makes a decision as if it were evaluating every possible decision point, calculating the regret for not making every possible decision, and gradually adjusting its decision-making process. Do this a billion times and you have Game Theory Optimal (GTO) play.

Deep reinforcement learning is required to wrestle with the more ambiguous aspects of the game. DeepStack evaluated the future game with a neural network, while Pluribus only needed to evaluate the immediate future game in order to adapt to the chaos of six-max. The strongest poker AI results often come from hybrid bots — GTO at the center, and exploitable at the edges.

Your hand histories are used for calibration purposes. They illustrate what real players typically do, allowing the bot to take conservative approaches when there is money on the line.

Mistakes, Bugs, and the Human Factor

While training a bot is primarily a matter of applying numbers, it also involves debugging the math.

I have even had bots fold pocket aces due to an offsuit rag being mislabeled as such in the feature vector. I have had bots attempt to bluff shove in limit Hold’em because the bet-size normalizer was broken.

Each parsing and feature engineering mistake compounds. Your poker AI algorithms are only as smart as the data you give them. Garbage in, garbage AI out.

That’s the thing: hand histories are biased towards the players that generated them: overfold, under-bluff, weird lines. If you blindly train a bot on hand histories, your bot will learn those idiosyncrasies. That’s sometimes good (exploitative strength vs a particular pool), sometimes a pitfall.

Testing and Evaluating a Poker Bot After Training

After you’ve completed the training process, you’ll have a model. However, you won’t have a completed bot yet.

You want to create an interface that provides the model with the current game state at the table; your betting strategy to handle the difficult situations outside of the training set; and some fallback options so that when the model is uncertain about a decision, it will always default to a safe approach.

The resulting product is more than just a mathematical concept. It’s software. A poker AI project that you can test, evaluate, and possibly even compete against.

In research communities, you use AIVAT variance reduction to estimate a bot’s win rate. In a private setting, you simply run the bot for 100,000 hands and hope that the graph trends upward.

Watching the Bot Play

This is where it becomes entertaining.

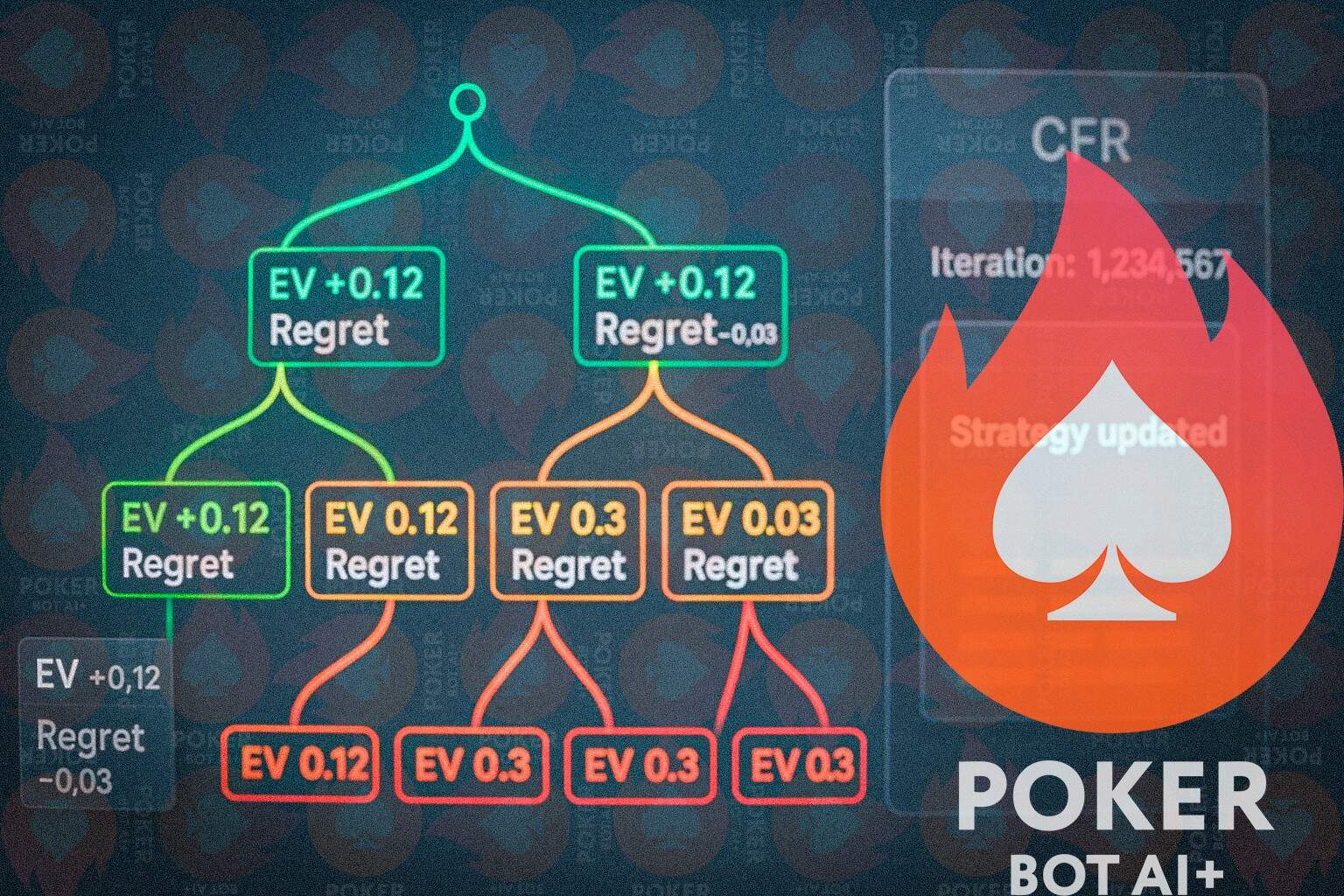

You’re watching the bot perform a bizarre action — checking-raising a dry flop with third pair. You check the log. The bot is exploiting a hidden tendency it found deep within the dataset: this opponent type overfolds aggressively to aggression in multi-way pots.

You watch the bot slow-play aces as you’ve never taught it to. You watch it make hero calls in a spot you would have folded in. There are moments of brilliance, and moments of utter failure.

And that’s the point. The bot is naturally inclined to make every decision found in the hand histories it has learned from. This is essentially the definition of poker bot hand history training — transforming strategies developed from tens of thousands of logged hands into playable decisions at the table. The patterns of thousands of players, normalized, weighted, converted into probability estimates.

Not perfect. But neither are we.